Architecture


Latest revision as of 00:26, 1 June 2018

Introduction

In this paper we will look at the methods and architecture for serving a MediaWiki instance that is performant, scalable, and redundant. We'll also touch on the related operations needed to complete the picture of an organization's IT infrastructure. On the implementation side, we will use the traditional GNU/Linux Free Software components: Linux, Apache, MySQL, PHP (LAMP), Squid/Varnish, LVS, Memcached, Nginx, etc. You won't need this information to run a single small wiki, but you will need it if you aspire to provide a large-scale, performant, enterprise wiki.[1] One goal of this paper is to update the information at mw:Manual:MediaWiki architecture.

|

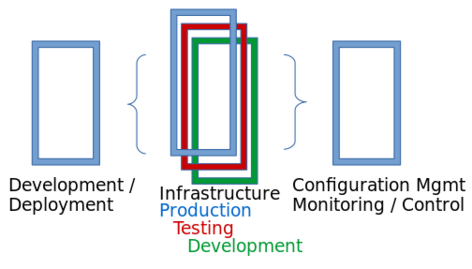

| Your infrastructure is dependent on Development Operations, Quality Assurance, Release Engineering and Product Deployment; plus Configuration Management, Monitoring and Control |

Overview

Start with geographic load balancing[2], based on the source IP of the client's DNS resolver, to direct clients to the nearest server cluster[3]. IP addresses are statically mapped to countries, and countries to clusters.
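The two-step static mapping (resolver IP to country, country to cluster) can be sketched as follows. This is not gdnsd code or gdnsd configuration; gdnsd does this lookup inside the DNS server from its own config. The prefixes are the RFC 5737 documentation ranges, and the country and cluster tables are hypothetical placeholders.

```python
import ipaddress

# Hypothetical prefix -> country table (RFC 5737 documentation ranges, not real allocations).
PREFIX_TO_COUNTRY = {
    ipaddress.ip_network("192.0.2.0/24"): "US",
    ipaddress.ip_network("198.51.100.0/24"): "NL",
    ipaddress.ip_network("203.0.113.0/24"): "SG",
}

# Hypothetical country -> cluster table; the cluster names are placeholders.
COUNTRY_TO_CLUSTER = {
    "US": "cluster-us-east",
    "NL": "cluster-eu-west",
    "SG": "cluster-asia",
}

DEFAULT_CLUSTER = "cluster-us-east"


def cluster_for_resolver(resolver_ip: str) -> str:
    """Pick a cluster from the source IP of the client's DNS resolver."""
    addr = ipaddress.ip_address(resolver_ip)
    for network, country in PREFIX_TO_COUNTRY.items():
        # Membership is False (not an error) when IPv4/IPv6 versions differ.
        if addr in network:
            return COUNTRY_TO_CLUSTER.get(country, DEFAULT_CLUSTER)
    # Resolvers we cannot place fall back to a default cluster.
    return DEFAULT_CLUSTER


print(cluster_for_resolver("198.51.100.53"))  # cluster-eu-west
print(cluster_for_resolver("192.0.2.10"))     # cluster-us-east
```

Because the lookup keys on the resolver rather than the end user, clients using a distant public resolver may be sent to a farther cluster.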

With TLS termination[4] at the proxy, you can secure your traffic while limiting the overhead of HTTPS to the proxy servers.

HTTP reverse-proxy caching is implemented with Varnish[5] or Squid[6], with the caches grouped into a text cluster for wiki content and a media cluster for images and static files.
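A minimal sketch of how a request might be assigned to the text or the media cache cluster. The rules (an `upload.` host prefix and an `/images/` path prefix) are hypothetical; in a real deployment this decision lives in DNS, load-balancer or Varnish configuration, not in Python. File extensions are deliberately not used, because wiki pages such as `File:Foo.jpg` or `User:Foo/common.js` end in media-like extensions but are wiki text.

```python
from urllib.parse import urlsplit

# Hypothetical routing rules for the media cluster.
MEDIA_HOST_PREFIXES = ("upload.",)
MEDIA_PATH_PREFIXES = ("/images/", "/w/images/")


def cache_cluster_for(url: str) -> str:
    """Return 'media' for uploaded images and static files, 'text' for wiki content."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    path = parts.path.lower()
    if host.startswith(MEDIA_HOST_PREFIXES):
        return "media"  # a dedicated upload hostname
    if path.startswith(MEDIA_PATH_PREFIXES):
        return "media"  # uploaded files served from the images directory
    # Everything else (articles, index.php, api.php, ...) is wiki text.
    return "text"
```

Keeping the two kinds of traffic apart lets each cluster be sized and tuned for its own object sizes and hit rates.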

Cache everywhere: most application data is cached in Memcached, a distributed object cache.
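Memcached servers do not coordinate with each other; the client decides which server holds each key. One common way to do that is consistent hashing, combined with the cache-aside pattern (check the cache, compute on a miss, store the result). The sketch below assumes hypothetical host names and uses plain dicts as stand-ins for the servers; it is not MediaWiki's own cache client.

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring that maps cache keys to memcached servers."""

    def __init__(self, servers, points_per_server=100):
        ring = []
        for server in servers:
            for i in range(points_per_server):
                ring.append((self._hash(f"{server}#{i}"), server))
        ring.sort()
        self._points = [point for point, _ in ring]
        self._servers = [server for _, server in ring]

    @staticmethod
    def _hash(value):
        # First 8 bytes of MD5 as an integer position on the ring.
        return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:8], "big")

    def server_for(self, key):
        # Walk clockwise to the first point after the key's hash, wrapping around.
        idx = bisect.bisect(self._points, self._hash(key)) % len(self._points)
        return self._servers[idx]


def get_or_set(store, key, compute):
    """Cache-aside: return the cached value, or compute it and store it on a miss."""
    if key in store:
        return store[key]
    value = compute()
    store[key] = value
    return value


SERVERS = ["mc1:11211", "mc2:11211", "mc3:11211"]  # hypothetical hosts
ring = HashRing(SERVERS)
stores = {server: {} for server in SERVERS}  # dicts stand in for memcached


def cached(key, compute):
    return get_or_set(stores[ring.server_for(key)], key, compute)
```

Removing a server only remaps the keys that lived on it, which is why consistent hashing is preferred over `hash(key) % len(servers)`, where nearly every key moves.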

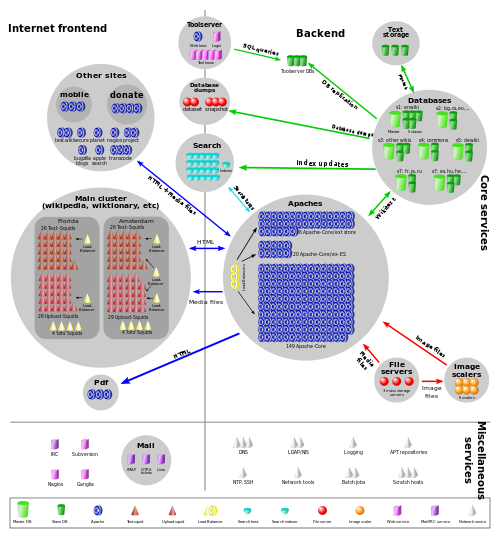

MediaWiki Architecture

Analytics

In the diagram above, much of the architecture is devoted to the analytics system. Substituting a third-party analytics platform could simplify it.

Reference Architecture

Amazon provides an example of a reference architecture[7] for Web Application Hosting.[8] In the AWS reference implementation, there are 7 key points in the system:

- DNS Resolution

- Content Delivery Network (CDN)

- Static Content storage/server

- Load Balancing

- Web Servers and Application Servers

- Auto Scaling to grow and shrink the web and application server tier

- Database backend with synchronous replication to standby

In a standard web application architecture, incoming user traffic is distributed through load balancers to a number of application servers that run independent instances. These application servers access shared storage, a shared database and a shared cache. This architecture scales well up to a six-figure number of users. The application servers are easy to scale because, as long as the shared components keep up, doubling the number of servers roughly doubles capacity.

The scalability limits, however, lie in the shared components: the load balancers, the database, the storage and the cache.[9]
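The point can be made concrete with a deliberately simplified capacity model (an assumption, not a measurement): each application server adds a fixed number of requests per second, but the system as a whole can never exceed the ceiling of its slowest shared component. All figures below are hypothetical.

```python
def system_capacity(app_servers, per_server_rps, shared_limit_rps):
    """Requests/s the system can serve: linear in app servers, capped by shared components."""
    return min(app_servers * per_server_rps, shared_limit_rps)


# Hypothetical figures: 100 req/s per app server, shared database saturates at 1,000 req/s.
for servers in (2, 4, 8, 16):
    print(servers, system_capacity(servers, 100, 1000))
# 2 200
# 4 400
# 8 800
# 16 1000
```

Past the cap, adding application servers buys nothing; only scaling the shared tier (replicas, sharding, more cache) raises the ceiling.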

This reference architecture is also incomplete because it does not address how you must integrate it into your operations. Obviously, you must have a means to deploy the software onto the system. It is equally important to configure, monitor and control the infrastructure so that it can be adjusted over time. Even if the infrastructure 'automatically' adjusts (fail-over, scale-up, scale-down), you need to be able to monitor these systems and know how they are performing. If the software is developed or deployed internally at all, you must also integrate the Development, Software Quality Assurance / Testing, and Release Management disciplines. We can take this even further and ask how the architecture enables you to migrate between geographic locations (switch data center) or to fail over in a catastrophe.

Wikimedia Foundation

Looking at the Wikimedia Foundation's usage and implementation of technology gives us great insight into how to grow and scale into a top-ten Internet site using commodity hardware plus free and open source infrastructure components. At VarnishCon 2016, Emanuele Rocca presented on running Wikipedia.org and detailed their operations engineering.[10] Aside from the architecture above, here are two other representations: their Web request flow from October 2015 and their architecture from 2010.

Data Persistence

It's the job of the persistence layer to store the data of your application. In MediaWiki, the old Constant Database (CDB) wrapper around PHP's native DBA functions (which provide a flat-file store in the style of Berkeley DB) has been replaced by plain PHP arrays stored in files that are included at runtime. This allows the HHVM opcode cache to precompile and cache these data structures. MediaWiki uses this approach for the interwiki cache and the localization cache. This is not to say that you will want or need the WMF interwiki list, but having a performant cache for the interwiki links contained in your wiki farm[11] is probably important.
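The idea of "data as an includable source file" can be illustrated with a Python analogue: write the lookup table out as a source-code literal, then load it like code so the interpreter compiles it, loosely as the opcode cache does for included PHP arrays. This is not MediaWiki code; the module name and interwiki entries are illustrative assumptions.

```python
import importlib.util
import pathlib
import tempfile


def write_interwiki_cache(path: pathlib.Path, mapping: dict) -> None:
    """Serialize a prefix -> URL table as a Python source file.

    repr() of a dict of plain strings is a valid Python literal, much as
    PHP's var_export() can produce an includable array literal.
    """
    source = "INTERWIKI = " + repr(dict(sorted(mapping.items()))) + "\n"
    path.write_text(source, encoding="utf-8")


def load_interwiki_cache(path: pathlib.Path) -> dict:
    """Load the generated file as a module; Python compiles it to bytecode."""
    spec = importlib.util.spec_from_file_location("interwiki_cache", path)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    return module.INTERWIKI


with tempfile.TemporaryDirectory() as tmp:
    cache_file = pathlib.Path(tmp) / "interwiki_cache.py"
    write_interwiki_cache(cache_file, {
        "mw": "https://www.mediawiki.org/wiki/$1",
        "wikipedia": "https://en.wikipedia.org/wiki/$1",
    })
    table = load_interwiki_cache(cache_file)
    print(table["mw"].replace("$1", "Manual:Interwiki"))
```

The trade-off is that lookups become ordinary in-memory dictionary accesses, at the cost of regenerating the file whenever the table changes.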

Persistent data is stored in the following ways:

- Metadata, such as article revision history, article relations (links, categories etc.), user accounts and settings are stored in the core databases

- Actual revision text is stored as blobs in External storage

- Static (uploaded) files, such as images, are stored separately on the image server; their metadata (size, type, etc.) is cached in the core database and object caches

Each wiki has its own core database (not its own server!). Each database cluster has one master and many replicated slaves: reads go to the slaves, and writes go to the master.
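The read/write split described above can be sketched as a small router: anything that is not a `SELECT` goes to the master, reads rotate round-robin across the slaves (replicas), and the wiki name selects the database on the shared servers. Host names are hypothetical, and round-robin is a simplifying assumption; real load balancers typically weight replicas and track their lag.

```python
import itertools


class DatabaseRouter:
    """Route writes to the master and spread reads across slaves (replicas)."""

    def __init__(self, master, replicas):
        self.master = master
        self._replicas = itertools.cycle(replicas)  # simple round-robin

    def host_for(self, sql):
        words = sql.split(None, 1)
        verb = words[0].upper() if words else ""
        if verb == "SELECT":
            return next(self._replicas)
        # Writes, DDL and anything unrecognized go to the master (the safe default).
        return self.master

    def target(self, wiki, sql):
        """Return (host, database): every wiki has its own database on shared servers."""
        return self.host_for(sql), wiki
```

A production router must also handle replication lag, for example by reading from the master immediately after a user's own write; this sketch ignores that.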

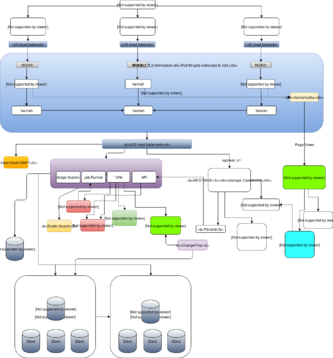

REST API and RESTBase

The MediaWiki REST API reached official v1 status in April 2017. The REST API gives you access to the content of your wiki. RESTBase is a storing proxy for the API, so read requests can be served even faster, with lower latency and less resource usage. The first use case for the REST API was speeding up VisualEditor.

RESTBase uses Apache Cassandra for backend storage.

See the announcement of the REST API on the WMF blog.
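A "storing proxy" can be sketched as a read-through store keyed by page title and revision: the first read of a revision is passed to the slow backend (for example, an HTML renderer), and later reads are served from storage. This is an illustration under stated assumptions, not RESTBase code: the dict stands in for Cassandra, the backend callable is hypothetical, and invalidation (for example after a template edit) is ignored.

```python
from typing import Callable, Dict, Tuple


class StoringProxy:
    """Read-through store in front of a slow backend, keyed by (title, revision)."""

    def __init__(self, backend: Callable[[str, int], str]):
        self._backend = backend
        self._store: Dict[Tuple[str, int], str] = {}  # stands in for Cassandra

    def get(self, title: str, revision: int) -> str:
        key = (title, revision)
        if key not in self._store:
            # Miss: ask the backend once and keep the result.
            self._store[key] = self._backend(title, revision)
        return self._store[key]
```

Keying on the revision works because a saved revision's content does not change, so a stored response stays valid for repeat reads.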

Component Optimization

In the LAMP architecture, each component should be optimized for performance, reliability, scalability and continuity. One Apache example: move .htaccess rules into the VirtualHost configuration and set AllowOverride None, so that Apache no longer looks for .htaccess files on every request.

For the PHP interpreter, it is better to use the HipHop Virtual Machine (HHVM), developed at Facebook, because it is not only faster than the Zend Engine but also lets you log fatal errors to syslog.

Application-Specific Tuning

So you want to use a server like nginx for static assets, but which assets are static?

Configure MediaWiki to generate thumbnails on 404 (only when a requested thumbnail does not exist yet) rather than calling stat() on every request.
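The 404 approach works because the web server serves existing thumbnails as plain static files and only falls through to a handler when the file is missing. MediaWiki ships its own handler for this (thumb_handler.php); the Python below is not that code, only a sketch of the flow. It assumes MediaWiki-style thumbnail URLs (`.../thumb/a/ab/File.jpg/120px-File.jpg`) and a hypothetical `render(name, width)` scaler.

```python
import re
from pathlib import Path

# MediaWiki-style thumbnail path: thumb/<hash dirs>/<file>/<width>px-<file>
THUMB_RE = re.compile(
    r"/thumb/(?P<hash>[0-9a-f]/[0-9a-f]{2})/(?P<name>[^/]+)/(?P<width>\d+)px-(?P=name)$"
)


def handle_404(url_path: str, thumb_root: Path, render) -> Path:
    """Called only when no file exists: generate the thumbnail and save it."""
    match = THUMB_RE.search(url_path)
    if match is None or match["name"] in (".", ".."):
        raise FileNotFoundError(url_path)  # not a thumbnail request: a genuine 404
    name, width = match["name"], int(match["width"])
    target = thumb_root / match["hash"] / name / f"{width}px-{name}"
    target.parent.mkdir(parents=True, exist_ok=True)
    # render() is a hypothetical scaler (e.g. a wrapper around an image library).
    target.write_bytes(render(name, width))
    return target  # later requests hit this file directly, with no PHP involved
```

The cost of scaling is paid once per thumbnail size, and page views never have to check whether thumbnails exist.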

- MediaWiki scales well with multiple CPUs.

- Three levels of caching: HTTP, PHP application (opcodes), and application data and parsing.

- Elasticsearch

- CentralAuth

- Require email for sign-up

InstantCommons is a great feature if you want the millions of photos found at Wikimedia Commons available at your fingertips. Even if you don't, you might still have your own collection of files and assets that you want to make available across your wiki farm. In that case, use MediaWiki's federated file system, which supports advanced configurations for load-balanced or local file repos[12].
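Conceptually, a federated file system resolves a file name against several repositories in a fixed order, local first and then each foreign repo as configured. The sketch below models that local-first precedence, which is our understanding of how MediaWiki searches its file repos; the `FileRepo` class, repo names and URLs are hypothetical and are not MediaWiki's API.

```python
from typing import Dict, List, Optional, Tuple


class FileRepo:
    """A hypothetical file repository: a name plus the files it holds."""

    def __init__(self, name: str, files: Dict[str, str]):
        self.name = name
        self.files = files  # file name -> URL

    def find(self, filename: str) -> Optional[str]:
        return self.files.get(filename)


def find_file(filename: str, local: FileRepo,
              foreign: List[FileRepo]) -> Optional[Tuple[str, str]]:
    """Check the local repo first, then each foreign repo in configured order."""
    for repo in [local, *foreign]:
        url = repo.find(filename)
        if url is not None:
            return repo.name, url
    return None  # no repository has the file
```

Local-first ordering means a wiki can override a shared file simply by uploading its own copy under the same name.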

Application Configuration

MediaWiki global variables

If the intl PECL extension is not available to handle Unicode normalization, MediaWiki falls back to a slow pure-PHP implementation. If you run a high-traffic site, install intl and read up on Unicode normalization.
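To see why normalization matters, the sketch below uses Python's standard `unicodedata` module (not PHP's intl `Normalizer`) on two strings that render identically but are encoded differently. MediaWiki normalizes input to Normalization Form C (NFC), and this comparison is the kind of work every request must do, which is why a fast native implementation pays off on a busy site.

```python
import unicodedata

composed = "caf\u00e9"     # 'é' as a single code point (U+00E9)
decomposed = "cafe\u0301"  # 'e' followed by a combining acute accent (U+0301)

# The two strings look the same on screen but compare unequal...
print(composed == decomposed)          # False
print(len(composed), len(decomposed))  # 4 5

# ...until both are normalized to the same form (NFC here).
print(unicodedata.normalize("NFC", decomposed) == composed)  # True
```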

Follow wiki best practices for cutting down spam: if your server is busy with spam bots, real users won't enjoy themselves.

Security considerations

You can't deploy an enterprise architecture without following security best practices, both the general ones and those specific to your application. For example, make sure that users with the editinterface permission are trusted admins.[13]
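A check like "only trusted admins hold editinterface" is easy to automate as part of a configuration review. The sketch below assumes a hypothetical permissions table shaped like MediaWiki's `$wgGroupPermissions` (group, then right, then granted or not); the group names and the trusted set are illustrative, and in practice you would export the real table from your wiki's configuration.

```python
# Hypothetical table shaped like $wgGroupPermissions: group -> {right: granted?}
GROUP_PERMISSIONS = {
    "*": {"read": True, "edit": False},
    "user": {"edit": True},
    "sysop": {"edit": True, "editinterface": True},
    "helpers": {"edit": True, "editinterface": True},
}

TRUSTED_GROUPS = {"sysop"}  # hypothetical: groups allowed to hold sensitive rights


def untrusted_grants(permissions, right, trusted):
    """Return the groups outside the trusted set that are granted `right`."""
    return sorted(
        group
        for group, rights in permissions.items()
        if rights.get(right) and group not in trusted
    )


print(untrusted_grants(GROUP_PERMISSIONS, "editinterface", TRUSTED_GROUPS))  # ['helpers']
```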

Deployments

A deployment to production should be scripted with orchestration tools, or with scripts that can be peer-reviewed, tested, and re-used. The Wikimedia Foundation (WMF) operates some of the largest collaboratively edited reference projects in the world, including Wikipedia. Their infrastructure powers some of the most heavily trafficked sites on the web, serving content in over a hundred languages to more than half a billion people each month. They use Puppet to manage server configuration, and this disciplined approach extends to every project of significance. For example, see the Wikimedia Foundation git repository for their Datacenter Switch. In fact, the configuration of just about everything that the Wikimedia Foundation runs in their Network Operations Center is online.[14][15] Their software releases are managed with scap, a tool built for the purpose.[16]

Containers

In order to scale, you need not only configuration management (etckeeper, git) and orchestration tools (Chef, Ansible, Puppet), but also a way to package base or complete systems for easy reproducibility. Technologies such as Docker or VirtualBox let you do just that. For example, to deploy a MariaDB Galera cluster for your database tier, you can use Rich Braun's Docker image instantlinux/mariadb-galera.[17]

Other Best Practices

Use naming conventions in your infrastructure so that you know what's what.[18]
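Once a naming convention exists, it can be enforced and parsed mechanically, for example in provisioning or monitoring scripts. The convention below (role letters, one site digit, a three-digit serial, as in `db1042`) and the site names are hypothetical examples, not the Wikimedia convention.

```python
import re

# Hypothetical convention: <role letters><site digit><three-digit serial>, e.g. "db1042".
HOSTNAME_RE = re.compile(r"^(?P<role>[a-z]+)(?P<site>[1-9])(?P<serial>\d{3})$")

SITES = {"1": "primary-dc", "2": "secondary-dc"}  # hypothetical site codes


def parse_hostname(name: str) -> dict:
    """Split a host name into role, site and serial, or reject it."""
    match = HOSTNAME_RE.match(name)
    if match is None:
        raise ValueError(f"{name!r} does not follow the naming convention")
    return {
        "role": match["role"],
        "site": SITES.get(match["site"], "unknown"),
        "serial": int(match["serial"]),
    }


print(parse_hostname("db1042"))  # {'role': 'db', 'site': 'primary-dc', 'serial': 42}
```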

Colophon

This work is a draft, and will be updated and refined with input from all stakeholders.

References

- Mark Bergsma presented 'Wikimedia architecture' in 2008. http://www.haute-disponibilite.net/wp-content/uploads/2008/06/wikimedia-architecture.pdf Although the material is now dated, it provides a clear example of running an architecture that supports 3 Gbit/s of data traffic and 30,000 HTTP requests/s on 350 commodity servers managed by 6 people.

- http://gdnsd.org/ gdnsd is an authoritative-only DNS server which is used by CDNs and sites like Wikipedia.org to do geographic balancing at the DNS layer.

- https://github.com/wikimedia/operations-dns

- https://www.digitalocean.com/community/tutorials/how-to-set-up-nginx-load-balancing-with-ssl-termination

- https://www.varnish-cache.org/

- https://library.oreilly.com/book/9780596001629/squid-the-definitive-guide/toc

- https://aws.amazon.com/architecture/

- https://s3.amazonaws.com/awsmedia/architecturecenter/AWS_ac_ra_web_01.pdf

- Nextcloud introduces an architecture called 'Global Scale' that is designed to scale to hundreds of millions of users. https://nextcloud.com/blog/nextcloud-announces-global-scale-architecture-as-part-of-nextcloud-12/

- https://upload.wikimedia.org/wikipedia/commons/d/d4/WMF_Traffic_Varnishcon_2016.pdf

- e.g. see https://freephile.org/w/api.php?action=query&meta=siteinfo&siprop=interwikimap

- $wgForeignFileRepos, https://www.mediawiki.org/wiki/Manual:$wgForeignFileRepos

- https://www.mediawiki.org/wiki/Manual:Security

- https://noc.wikimedia.org/conf/

- https://phabricator.wikimedia.org/source/mediawiki-config/

- Scap diagram by MModell (WMF), own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=39343890

- https://hub.docker.com/r/instantlinux/mariadb-galera/

- https://wikitech.wikimedia.org/wiki/Infrastructure_naming_conventions