Difference between revisions of "Docker"

(→Docker in Docker: more security focus) |

|||

| (15 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | Linux containers (LXC)<ref>https://help.ubuntu.com/lts/serverguide/lxc.html</ref> technology has taken off with Docker https://www.docker.com/ <ref>[http://opensource.com/business/14/7/interview-j%C3%A9r%C3%B4me-petazzoni-docker See the interview on opensource.com]</ref> <ref>more info from Wikipedia [[wp:Docker_(software)]]</ref> which was released as open source in March 2013. RedHat and others have collaborated with the corporate backer to the technology seemingly to compete with Canonical's JuJu https://juju.ubuntu.com/ and Charm technology which also is based on Linux containers. Linux containers are built into the linux kernel, and so offer a lightweight native method of virtualization compared to more traditional (heavyweight) virtualization techniques like VMWare, Vagrant, VirtualBox. | + | [[File:Docker-architecture.svg|thumb|right|Docker Architecture|link=https://docs.docker.com/get-started/overview/#docker-architecture]] |

| + | Linux containers (LXC)<ref>https://help.ubuntu.com/lts/serverguide/lxc.html</ref> technology has taken off with Docker https://www.docker.com/ <ref>[http://opensource.com/business/14/7/interview-j%C3%A9r%C3%B4me-petazzoni-docker See the interview on opensource.com]</ref> <ref>more info from Wikipedia [[wp:Docker_(software)]]</ref> which was released as open source in March 2013. RedHat and others have collaborated with the corporate backer to the technology seemingly to compete with Canonical's JuJu https://juju.ubuntu.com/ and Charm technology which also is based on Linux containers. Linux containers are built into the linux kernel, and so offer a lightweight native method of virtualization compared to more traditional (heavyweight) virtualization techniques like [[VMWare]], [[Vagrant]], [[VirtualBox]]. | ||

| − | Essentially, the difference is the hypervisor and OS. Whereas containers are implemented with kernel features like namespaces, cgroups and chroots, a full VM requires a hypervisor plus an operating system in the VM. | + | Essentially, the difference is the hypervisor and OS. Whereas containers are implemented with kernel features like namespaces, cgroups and chroots, a full VM requires a hypervisor plus an operating system in the VM. Docker runs a [https://docs.docker.com/get-started/overview/#docker-architecture docker daemon] on the Docker Host. (In comparison, [[Podman]] offers a daemon-less technique focused on the parent process - using a fork and exec model.) |

| − | |||

| − | + | It is important to realize that Docker is inherently a '''Linux''' technology. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | [https://docs.docker.com/engine/ Docker Engine] is an open source containerization technology for building and containerizing your applications. Docker Engine acts as a client-server application with: | |

| − | * | + | *A server with a long-running daemon process <code>dockerd</code>. |

| − | * | + | *APIs which specify interfaces that programs can use to talk to and instruct the Docker daemon. |

| − | * | + | *A command line interface (CLI) client <code>docker</code>. |

| − | |||

| − | |||

| − | + | The CLI uses Docker APIs to control or interact with the Docker daemon through scripting or direct CLI commands. Many other Docker applications use the underlying API and CLI. The daemon creates and manage Docker objects, such as images, containers, networks, and volumes. | |

| − | + | For more details, see [https://docs.docker.com/get-started/overview/#docker-architecture Docker Architecture].{{#ev:youtube|YFl2mCHdv24|480|right}} | |

| + | {{#ev:youtube|Qw9zlE3t8Ko|480|right}} | ||

| + | *https://docs.docker.com/develop/ | ||

| + | *https://docs.docker.com/engine/ | ||

| + | *https://docs.docker.com/engine/install/ | ||

| − | == | + | ==Volumes and Mounts== |

| − | https://docs.docker.com/engine/ | + | From [https://docs.docker.com/storage/ Data management in Docker]<blockquote>By default all files created inside a container are stored on a writable container layer. This means that: |

| − | + | ||

| + | The data doesn’t persist when that container no longer exists, and it can be difficult to get the data out of the container if another process needs it. | ||

| + | |||

| + | A container’s writable layer is tightly coupled to the host machine where the container is running. You can’t easily move the data somewhere else. | ||

| + | |||

| + | Writing into a container’s writable layer requires a storage driver to manage the filesystem. The storage driver provides a union filesystem, using the Linux kernel. This extra abstraction reduces performance as compared to using data volumes, which write directly to the host filesystem. | ||

| + | |||

| + | Docker has two options for containers to store files on the host machine, so that the files are persisted even after the container stops: volumes, and bind mounts. | ||

| + | |||

| + | Docker also supports containers storing files in-memory on the host machine. Such files are not persisted. If you’re running Docker on Linux, tmpfs mount is used to store files in the host’s system memory. If you’re running Docker on Windows, named pipe is used to store files in the host’s system memory.</blockquote><br /> | ||

| + | |||

| + | That is all you need to know<ref>Not really. In practice you need to fully understand how the volumes and mounts work to avoid very common pitfalls like the [https://www.joyfulbikeshedding.com/blog/2021-03-15-docker-and-the-host-filesystem-owner-matching-problem.html '''host filesystem owner matching problem'''] In a nutshell, the best approach is to run your container with a UID/GID that matches the host's UID/GID. It can be hard to implement while addressing all caveats. Hongli Lai [https://www.joyfulbikeshedding.com/blog/2023-04-20-cure-docker-volume-permission-pains-with-matchhostfsowner.html wrote a tool to solve this] ([https://github.com/FooBarWidget/matchhostfsowner MatchHostFSOwner])</ref> about Docker when it comes to sharing files between the host and the container. | ||

| + | |||

| + | Volumes are the preferred way to [https://docs.docker.com/get-started/05_persisting_data/ persist data in Docker containers] and services. | ||

| + | |||

| + | Bind mounts are a way to share a directory from the host (typically your app source code) into a running container and have the container respond immediately to changes made to files on the host. If your containerized app is a [[nodejs]] app, and needs to be restarted to "see" changes, you can wrap <code>node</code> with [https://www.npmjs.com/package/nodemon nodemon] which monitors files for changes and restarts the node app based on those files. PHP apps execute the newest code on each request (via the web server) and so do not require any special wrapper. | ||

| + | |||

| + | |||

| + | Note: '''Docker Desktop''' is simply a fancy GUI '''client''' application that uses virtualization (a Linux Virtual Machine) to bundle the Docker Engine into your host OS. | ||

| + | |||

| + | *Volumes are the right choice when your application requires fully native file system behavior on Docker Desktop. For example, a database engine requires precise control over disk flushing to guarantee transaction durability. Volumes are stored in the Linux VM and can make these guarantees, whereas bind mounts are remoted to macOS or Windows OS, where the file systems behave slightly differently. | ||

| + | |||

| + | |||

| + | |||

| + | When you use either bind mounts or volumes, keep the following in mind: | ||

| + | |||

| + | *If you mount an '''empty volume''' into a directory in the container in which files or directories exist, these files or directories are propagated (copied) into the volume. Similarly, if you start a container and specify a volume which does not already exist, an empty volume is created for you. This is a good way to pre-populate data that another container needs. | ||

| + | *If you mount a '''bind mount or non-empty volume''' into a directory in the container in which some files or directories exist, these files or directories are obscured by the mount, just as if you saved files into <code>/mnt</code> on a Linux host and then mounted a USB drive into <code>/mnt</code>. The contents of <code>/mnt</code> would be obscured by the contents of the USB drive until the USB drive were unmounted. The obscured files are not removed or altered, but are not accessible while the bind mount or volume is mounted. | ||

| + | |||

| + | ==VSCode with Docker== | ||

| + | Each time you create a Docker container, and connect VSCode to that container, VSCode installs a server into the container so that it can communicate with it. So, there is a small (a couple minutes?) delay while this happens before you can edit or do anything. Best to stop a container and only re-build it when necessary to avoid the delay. | ||

| + | ==Docker Images== | ||

| + | Bitnami has a [https://github.com/bitnami/bitnami-docker-mediawiki Docker Image for MediaWiki] Don't use Bitnami. You will thank me later. | ||

| + | |||

| + | ==Security== | ||

| + | Docker apparently doesn't respect your host firewall by default - leading to the potential for a gaping security hole. This has been a [https://github.com/docker/for-linux/issues/690 reported bug since 2018]. One fix is to [https://www.smarthomebeginner.com/traefik-docker-security-best-practices/#10_Change_DOCKER_OPTS_to_Respect_IP_Table_Firewall set the DOCKER_OPTS] configuration parameter. Another is to add a jump rule to UFW. The bug report links to docs and multiple references. | ||

| + | |||

| + | == Docker Downsides == | ||

| + | One major negative to the system architecture of Docker is that it relies on a server daemon. **Unlike** [[Podman]], Docker's Engine can use up 4GB of RAM just sitting idle. | ||

| + | A similar thing happens with WSL2 on Windows <ref>https://news.ycombinator.com/item?id=26897095</ref> | ||

| + | |||

| + | == Future Reading == | ||

| + | |||

| + | # The compose application model https://docs.docker.com/compose/compose-file/02-model/ | ||

| + | # Understand how moby [https://github.com/moby/buildkit buildkit] is integrated with [https://github.com/docker/buildx buildx] (or docker) and use it. | ||

| + | # Interesting read about docker commit https://adamtheautomator.com/docker-commit/ | ||

| + | |||

| + | Inspect your running container based on it's container name: docker inspect $(docker container ls | awk '/app2/ {print $1}') | ||

| + | |||

| + | == Docker in Docker == | ||

| + | Before you get 'fancy' with Docker, be sure to read and understand the Security best practices for Docker | ||

| + | https://cheatsheetseries.owasp.org/cheatsheets/Docker_Security_Cheat_Sheet.html | ||

| + | |||

| + | Containers (unlike virtual machines) share the kernel with the host, therefore kernel exploits executed inside a container will directly hit the host kernel. For example, a kernel privilege escalation exploit ([https://github.com/scumjr/dirtycow-vdso like Dirty COW]) executed inside a well-insulated container will result in root access in the host. | ||

| + | |||

| + | That said, https://devopscube.com/run-docker-in-docker/ presents several use cases and techniques for DinD. | ||

| + | |||

| + | === Docker In Docker === | ||

| + | The DinD method is '''Docker inside Docker'''. Docker provides a special Docker container with the <tt>dind</tt> tag which is pre-configured to run Docker inside the container image. | ||

| + | |||

| + | An example of Docker In Docker would be running Docker inside a Docker image on Windows Subsystem for Linux (WSL) | ||

| + | |||

| + | # Install Docker Desktop for Windows. | ||

| + | # Enable the WSL 2 backend in Docker Desktop settings. | ||

| + | # Set WSL 2 as your default version: <code>wsl --set-default-version 2</code> in PowerShell. | ||

| + | # Install a Linux distribution from the Microsoft Store (Ubuntu, for example). | ||

| + | # Open your Linux distribution (WSL 2 instance), and install Docker. | ||

| + | # Add your user to the Docker group to manage Docker as a non-root user: <code>sudo usermod -aG docker $USER</code>. | ||

| + | # Test Docker with: <code>docker run hello-world</code>. | ||

| + | |||

| + | Running Docker inside Docker can lead to some security and functionality issues. It's often better to use the Docker daemon of the host machine. You can do this by mounting the Docker socket from the host into the container. See the "Docker outside of Docker" section. | ||

| + | |||

| + | === Docker outside of Docker === | ||

| + | The DooD method, '''Docker outside of Docker,''' uses the docker socket of the host system from inside containers by mounting the host socket into the filesystem of the container. | ||

| + | |||

| + | Try curl to see how different processes can communicate through a socket on the same host: <syntaxhighlight lang="bash"> | ||

| + | curl --unix-socket /var/run/docker.sock http://localhost/version | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | While there are benefits to using the DooD method, you are giving your containers full control over the Docker daemon, which effectively is root on the host system. To mitigate these risks, again refer to [https://docs.docker.com/engine/security/ the Security model of Docker], the [https://cheatsheetseries.owasp.org/cheatsheets/Docker_Security_Cheat_Sheet.html Docker Security Cheat Sheet from OWASP] and be sure to run your Docker daemon as an unprivileged user. | ||

| + | |||

| + | |||

| + | === For security, use Nestybox Sysbox runtime === | ||

| + | See https://github.com/nestybox/sysbox<nowiki/> | ||

{{References}} | {{References}} | ||

| Line 36: | Line 114: | ||

[[Category:Virtualization]] | [[Category:Virtualization]] | ||

[[Category:DevOps]] | [[Category:DevOps]] | ||

| + | [[Category:Kubernetes]] | ||

Latest revision as of 17:12, 19 December 2023

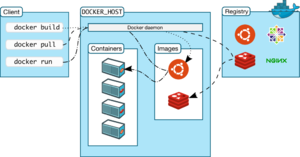

Linux containers (LXC)[1] took off with Docker (https://www.docker.com/)[2][3], which was released as open source in March 2013. Red Hat and others have collaborated with Docker's corporate backer, seemingly to compete with Canonical's Juju (https://juju.ubuntu.com/) and its Charm technology, which is also based on Linux containers. Containers rely on features built into the Linux kernel, so they offer a lightweight, native method of virtualization compared to more traditional (heavyweight) virtualization tools like VMware, Vagrant, and VirtualBox.

Essentially, the difference is the hypervisor and the guest OS. Containers are implemented with kernel features like namespaces, cgroups, and chroots, whereas a full VM requires a hypervisor plus an operating system inside the VM. Docker runs a Docker daemon on the Docker host. (In comparison, Podman offers a daemonless technique centered on the parent process, using a fork-and-exec model.)

It is important to realize that Docker is inherently a Linux technology.

Docker Engine is an open source containerization technology for building and containerizing your applications. Docker Engine acts as a client-server application with:

- A server with a long-running daemon process `dockerd`.

- APIs which specify interfaces that programs can use to talk to and instruct the Docker daemon.

- A command line interface (CLI) client `docker`.

The CLI uses Docker APIs to control or interact with the Docker daemon through scripting or direct CLI commands. Many other Docker applications use the underlying API and CLI. The daemon creates and manages Docker objects, such as images, containers, networks, and volumes.
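To make the client-server split concrete, here is a minimal sketch of a program talking to the daemon's API directly, without the `docker` CLI. It assumes the daemon listens on the default Unix socket `/var/run/docker.sock` and that your user may read it; the class and function names (`UnixSocketHTTPConnection`, `engine_get`) are made up for this illustration and are not part of any Docker library.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that travels over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" only fills in the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path, socket_path="/var/run/docker.sock"):
    """GET an Engine API path and return the decoded JSON body."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Engine API returned HTTP {response.status}")
        return json.loads(body)
    finally:
        conn.close()
```

On a host with a running daemon, `engine_get("/version")` should return a dictionary describing the Engine. The exact keys depend on your Docker version, so inspect the result rather than relying on a particular field.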

For more details, see Docker Architecture.

- https://docs.docker.com/develop/

- https://docs.docker.com/engine/

- https://docs.docker.com/engine/install/


Volumes and Mounts

From Data management in Docker

By default all files created inside a container are stored on a writable container layer. This means that:

The data doesn’t persist when that container no longer exists, and it can be difficult to get the data out of the container if another process needs it.

A container’s writable layer is tightly coupled to the host machine where the container is running. You can’t easily move the data somewhere else.

Writing into a container’s writable layer requires a storage driver to manage the filesystem. The storage driver provides a union filesystem, using the Linux kernel. This extra abstraction reduces performance as compared to using data volumes, which write directly to the host filesystem.

Docker has two options for containers to store files on the host machine, so that the files are persisted even after the container stops: volumes, and bind mounts.

Docker also supports containers storing files in-memory on the host machine. Such files are not persisted. If you’re running Docker on Linux, tmpfs mount is used to store files in the host’s system memory. If you’re running Docker on Windows, named pipe is used to store files in the host’s system memory.

That is all you need to know[4] about Docker when it comes to sharing files between the host and the container.

Volumes are the preferred way to persist data in Docker containers and services.

Bind mounts are a way to share a directory from the host (typically your app source code) into a running container and have the container respond immediately to changes made to files on the host. If your containerized app is a Node.js app that needs to be restarted to "see" changes, you can wrap node with nodemon, which monitors files for changes and restarts the app when they change. PHP apps execute the newest code on each request (via the web server) and so do not require any special wrapper.
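The difference between the two mount types is easiest to see in the `docker run` arguments. The sketch below only assembles a command line and does not execute it. The volume name `app-data`, the `./src` directory, the container paths, and the `node:20` image are hypothetical placeholders. The optional `--user` flag follows the footnote's advice of running the container with the host's UID/GID, and assumes a Unix host where `os.getuid()` is available.

```python
import os
import shlex


def volume_mount(volume_name, container_path):
    """Return --mount arguments for a Docker-managed named volume."""
    return ["--mount", f"type=volume,source={volume_name},target={container_path}"]


def bind_mount(host_dir, container_path):
    """Return --mount arguments that share a host directory with the container."""
    source = os.path.abspath(host_dir)  # bind mount sources must be absolute paths
    return ["--mount", f"type=bind,source={source},target={container_path}"]


def run_command(image, mounts, as_host_user=False):
    """Assemble (but do not execute) a `docker run` command line."""
    argv = ["docker", "run", "--rm"]
    if as_host_user:
        # Files written to a bind mount then belong to the host user, not root.
        argv += ["--user", f"{os.getuid()}:{os.getgid()}"]
    for mount in mounts:
        argv += mount
    argv.append(image)
    return argv


if __name__ == "__main__":
    # Hypothetical names: "app-data" volume, ./src directory, node:20 image.
    argv = run_command(
        "node:20",
        [volume_mount("app-data", "/var/lib/app"), bind_mount("./src", "/app")],
        as_host_user=True,
    )
    print(shlex.join(argv))
```

Printing the command instead of running it lets you review it first; pass the list to `subprocess.run` once it looks right. Matching the UID/GID this way covers only the simple case, and the footnote's linked articles describe the remaining caveats.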

Note: Docker Desktop is simply a fancy GUI client application that uses virtualization (a Linux Virtual Machine) to bundle the Docker Engine into your host OS.

- Volumes are the right choice when your application requires fully native file system behavior on Docker Desktop. For example, a database engine requires precise control over disk flushing to guarantee transaction durability. Volumes are stored in the Linux VM and can make these guarantees, whereas bind mounts are remoted to macOS or Windows OS, where the file systems behave slightly differently.

When you use either bind mounts or volumes, keep the following in mind:

- If you mount an empty volume into a directory in the container in which files or directories exist, these files or directories are propagated (copied) into the volume. Similarly, if you start a container and specify a volume which does not already exist, an empty volume is created for you. This is a good way to pre-populate data that another container needs.

- If you mount a bind mount or non-empty volume into a directory in the container in which some files or directories exist, these files or directories are obscured by the mount, just as if you saved files into `/mnt` on a Linux host and then mounted a USB drive into `/mnt`. The contents of `/mnt` would be obscured by the contents of the USB drive until the USB drive were unmounted. The obscured files are not removed or altered, but are not accessible while the bind mount or volume is mounted.

VSCode with Docker

Each time you create a Docker container and connect VSCode to it, VSCode installs a server into the container so that the two can communicate. This causes a small delay (a couple of minutes?) before you can edit or do anything. To avoid it, stop and restart an existing container, and rebuild only when necessary.

Docker Images

Bitnami has a Docker Image for MediaWiki. Don't use Bitnami. You will thank me later.

Security

Docker apparently doesn't respect your host firewall by default, which can open a gaping security hole. This has been a reported bug since 2018. One fix is to set the DOCKER_OPTS configuration parameter. Another is to add a jump rule to UFW. The bug report links to docs and multiple references.
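Separately from the two fixes named above, a common precaution is to publish container ports on the loopback address only, so that a published port is normally not reachable through the host's public interfaces. This is an addition of this sketch, not something taken from the bug report, and it does not replace proper firewall configuration. The helper names and the `nginx` image in the usage note are hypothetical; the sketch only builds the arguments and does not run anything.

```python
def publish(host_port, container_port, host_ip="127.0.0.1"):
    """Return -p arguments that publish a container port on one host address."""
    if not (0 < host_port < 65536 and 0 < container_port < 65536):
        raise ValueError("ports must be between 1 and 65535")
    return ["-p", f"{host_ip}:{host_port}:{container_port}"]


def run_detached(image, host_port, container_port):
    """Assemble (but do not execute) a detached `docker run` with a loopback-only port."""
    return ["docker", "run", "--detach", *publish(host_port, container_port), image]
```

For example, `run_detached("nginx", 8080, 80)` yields a command that serves the container's port 80 at `127.0.0.1:8080` on the host. A reverse proxy on the host can then decide what is exposed to the outside.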

Docker Downsides

One major drawback of Docker's system architecture is that it relies on a server daemon. Unlike Podman, Docker's Engine can use up 4 GB of RAM while just sitting idle. A similar thing happens with WSL2 on Windows.[5]

Future Reading

- The compose application model https://docs.docker.com/compose/compose-file/02-model/

- Understand how moby buildkit is integrated with buildx (or docker) and use it.

- Interesting read about docker commit https://adamtheautomator.com/docker-commit/

Inspect your running container based on its container name (here `app2`): `docker inspect $(docker container ls | awk '/app2/ {print $1}')`
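If you would rather script this lookup than pipe through awk, the following sketch does the same thing with the CLI's own `--filter name=...` option and then picks a few fields out of the JSON that `docker inspect` prints. It assumes the `docker` CLI is on your PATH and a daemon is running. The function names are made up for this illustration, and the chosen fields (`Id`, `Name`, `State.Status`) are just a sample of what the output contains.

```python
import json
import subprocess


def container_ids_by_name(name):
    """Return IDs of running containers whose name matches `name`."""
    result = subprocess.run(
        ["docker", "container", "ls", "--quiet", "--filter", f"name={name}"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.split()


def summarize(inspect_json):
    """Pick a few fields out of `docker inspect` output (a JSON array)."""
    return [
        {
            "id": entry["Id"][:12],
            "name": entry["Name"].lstrip("/"),  # names are reported with a leading slash
            "status": entry["State"]["Status"],
        }
        for entry in json.loads(inspect_json)
    ]


def inspect_by_name(name):
    """Inspect every running container matching `name`."""
    ids = container_ids_by_name(name)
    if not ids:
        return []
    result = subprocess.run(
        ["docker", "inspect", *ids],
        check=True, capture_output=True, text=True,
    )
    return summarize(result.stdout)
```

Calling `inspect_by_name("app2")` returns one summary per matching container, or an empty list when nothing matches. Filtering by name avoids the awk pattern accidentally matching an image name or command on the same line.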

Docker in Docker

Before you get 'fancy' with Docker, be sure to read and understand the Security best practices for Docker https://cheatsheetseries.owasp.org/cheatsheets/Docker_Security_Cheat_Sheet.html

Containers (unlike virtual machines) share the kernel with the host, therefore kernel exploits executed inside a container will directly hit the host kernel. For example, a kernel privilege escalation exploit (like Dirty COW) executed inside a well-insulated container will result in root access in the host.

That said, https://devopscube.com/run-docker-in-docker/ presents several use cases and techniques for DinD.

Docker In Docker

The DinD method is Docker inside Docker. Docker provides a special Docker image with the dind tag, which is pre-configured to run Docker inside the container.

An example of Docker In Docker would be running Docker inside a Docker image on Windows Subsystem for Linux (WSL):

- Install Docker Desktop for Windows.

- Enable the WSL 2 backend in Docker Desktop settings.

- Set WSL 2 as your default version: `wsl --set-default-version 2` in PowerShell.

- Install a Linux distribution from the Microsoft Store (Ubuntu, for example).

- Open your Linux distribution (WSL 2 instance), and install Docker.

- Add your user to the Docker group to manage Docker as a non-root user: `sudo usermod -aG docker $USER`.

- Test Docker with: `docker run hello-world`.

Running Docker inside Docker can lead to some security and functionality issues. It's often better to use the Docker daemon of the host machine. You can do this by mounting the Docker socket from the host into the container. See the "Docker outside of Docker" section.

Docker outside of Docker

The DooD method, Docker outside of Docker, uses the docker socket of the host system from inside containers by mounting the host socket into the filesystem of the container.

Try curl to see how different processes can communicate through a socket on the same host:

curl --unix-socket /var/run/docker.sock http://localhost/version

While the DooD method has benefits, it gives your containers full control over the Docker daemon, which is effectively root on the host system. To mitigate these risks, again refer to the Security model of Docker and the Docker Security Cheat Sheet from OWASP, and be sure to run your Docker daemon as an unprivileged user.
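To compare the two methods side by side, the sketch below assembles the `docker run` command each one typically starts from, without executing anything. The container name `inner-docker` is a hypothetical placeholder, and the `docker:cli` image in the usage note is an assumption about which client image you have available. The socket path is the default one used in the curl example.

```python
DOCKER_SOCKET = "/var/run/docker.sock"


def dind_command(name="inner-docker"):
    """DinD: start a separate daemon inside a privileged container."""
    return ["docker", "run", "--privileged", "--detach", "--name", name, "docker:dind"]


def dood_command(image, *command):
    """DooD: reuse the host daemon by bind-mounting its socket into the container."""
    return [
        "docker", "run", "--rm",
        "--volume", f"{DOCKER_SOCKET}:{DOCKER_SOCKET}",
        image, *command,
    ]
```

For example, `dood_command("docker:cli", "docker", "ps")` lists the host's containers from inside a container, which shows why the mounted socket amounts to root on the host. The `--privileged` flag in the DinD variant carries its own risks, so neither command should be run before reading the security references above.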

For security, use Nestybox Sysbox runtime

See https://github.com/nestybox/sysbox

References

- [1] https://help.ubuntu.com/lts/serverguide/lxc.html

- [2] See the interview on opensource.com: http://opensource.com/business/14/7/interview-j%C3%A9r%C3%B4me-petazzoni-docker

- [3] More info from Wikipedia: wp:Docker_(software)

- [4] Not really. In practice you need to fully understand how volumes and mounts work to avoid very common pitfalls like the host filesystem owner matching problem. In a nutshell, the best approach is to run your container with a UID/GID that matches the host's UID/GID. This can be hard to implement while addressing all caveats. Hongli Lai wrote a tool to solve this (MatchHostFSOwner).

- [5] https://news.ycombinator.com/item?id=26897095