Difference between revisions of "Backups"

Latest revision as of 14:32, 10 June 2016

Backups aren't worth anything unless you know how to restore from them, and you've tested the restore procedure to prove your backups are good. What's more, you'll gain that much more clarity about your backups. Sometimes, you don't have backups but you lose data and you're faced with the daunting task of restoring from corrupted media or deleted files. Either way, once disaster strikes, you'll want good information on how to Restore.

Types of Backups

There are four primary use cases or types of backups:

- Operational: Routine (e.g. daily) backups - especially in a networked multi-user environment for operational resiliency and efficiency

- File: "Full" file system archive to online storage before re-building a host (completely reformatting disks and partitions)

- System Image: A complete operating system image to allow either cloning to new hardware, or full system restoration

- Database: See Mysqldump

Example Use Cases

- I accidentally deleted a file, and I want to get it back (without having to call Tech Services) and if I can't get my file back, I want to blame tech services

- I want to make an online (meaning network accessible) archive of my old machine before I wipe it completely to turn it into a Media Center PC (Using Mythbuntu or LinuxMCE)

- I want to make a full system backup of my notebook before I do a distribution upgrade from Kubuntu 8.04 to Kubuntu 8.10, just in case the upgrade doesn't go smoothly, so that I can revert and start again, or revert and work on resolving blockers.

Given the different goals behind the term "backup", there are naturally different solutions that work best in each scenario. If you are a technical user, you must get familiar with RSync. Even when you need to employ other systems or tools, RSync is the Swiss Army knife of backups.

Operational Backups

Scenario A (Operational Backups) is very much like Scenario B (File Backups), with the distinction that it obviously multiplies the number of machines included, and is meant to persist through time as a component of your organization's operations and disaster recovery plans. You may effectively use these techniques in your home or home office just as much as they benefit businesses.

Tools

You could use RSync here, but by the time you get into the complexity of operational backups, you probably want to employ an application that offers even more flexibility, administration and power.

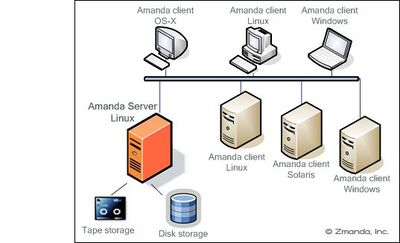

Amanda

One of the best solutions for operational backups, if not the best, is Amanda. Amanda is both an open source project and a commercially supported product through the Zmanda corporation.

Resources

- wp:Category:Free_backup_software. The most interesting are:

- http://en.wikipedia.org/wiki/BackupPC

- http://en.wikipedia.org/wiki/Bacula (which is mostly comparable to Amanda, but uses a non-standard albeit open format)

- http://en.wikipedia.org/wiki/FlyBack, which is a nice UI on rsync and aims to mimic the Mac Time Machine

- You're familiar with http://en.wikipedia.org/wiki/Rsync by now, right?

- TimeVault simplifies data backup for Ubuntu. TimeVault is only beta at this point.

File Backups

This could also be called "Application Backups", because oftentimes the goal in this category is to back up an application before making major changes to it, such as an upgrade. Or it could come close to copying every file on a particular computer to some backup location.

One distinction that I think characterizes "file backups" compared with "operational backups" is duration. If the backup is motivated by the thinking "Let's put this somewhere where we can still get at it for a while, but we'll eventually throw away the backup when we're sure we have everything we need moved or migrated", then it is a file backup. You could still be backing up nearly a full operating system, but you're probably not interested in applications as much as their settings and configurations. In many "File Backups" scenarios, you have a specific set of files that you're targeting. For example, "We're going to do a full upgrade of the website, so let's create a backup of the site before the upgrade."

Another distinction is who is doing the backup. I consider "File backups" to be initiated by the user (even if that user is a system administrator, developer or database administrator) whereas Operational Backups "just happen" (with a Technology Services or other operations team responsible for making them happen).
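The "back up the site before the upgrade" case can be sketched as a small shell function. This is only a minimal sketch: the function name `archive_dir` and the example paths are hypothetical, and it assumes a `tar` that supports `-z` (gzip), as GNU and BSD tar do.

```shell
# Archive one directory into a date-stamped tarball and print the tarball path.
# Usage: archive_dir SOURCE_DIR DEST_DIR   (both names are illustrative)
archive_dir() {
    src="$1"
    dest_dir="$2"
    stamp=$(date +%Y%m%d-%H%M%S)
    name="$(basename "$src")-$stamp.tar.gz"
    # -C makes the paths inside the archive relative to the parent directory.
    tar -czf "$dest_dir/$name" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    printf '%s\n' "$dest_dir/$name"
}
```

For example, `archive_dir /var/www/mysite /mnt/usbdrive/backups` (hypothetical paths) would leave a tarball named after the site directory and the current time. Because a file backup is meant to be thrown away eventually, the date stamp makes it easy to see later which archives are old enough to delete.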

Example 1: Archive the old workstation

These are the disks that I have to preserve by copying the good and throwing away the bad.

greg@liberty:~$ sudo fdisk -l

Disk /dev/hda: 40.0 GB, 40000020480 bytes
255 heads, 63 sectors/track, 4863 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/hda1   *           1        2550    20482843+   7  HPFS/NTFS
/dev/hda2            2551        2633      666697+   7  HPFS/NTFS
/dev/hda3            2634        2646      104422+  83  Linux
/dev/hda4            2647        4863    17808052+   f  W95 Ext'd (LBA)
/dev/hda5            2647        4668    16241683+  83  Linux
/dev/hda6            4669        4863     1566306   82  Linux swap / Solaris

Disk /dev/hdb: 81.9 GB, 81964302336 bytes
16 heads, 63 sectors/track, 158816 cylinders
Units = cylinders of 1008 * 512 = 516096 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/hdb1               1      158816    80043232+  83  Linux

One of the first tasks at hand was to mount my old Windows drive in a way that made it accessible to me (as opposed to read-only to root). Learn more about this at https://help.ubuntu.com/community/AutomaticallyMountPartitions

The file /etc/fstab sets up your file system devices, and it now uses universally unique identifiers (UUIDs) to avoid problems with pluggable external disks.

You can learn the identifier with the command:

sudo vol_id -u /dev/sda1

But it turns out there is a simpler way of finding out what the volume id is for a disk drive:

ls -l /dev/disk/by-uuid/

total 0

lrwxrwxrwx 1 root root 10 2008-10-21 09:32 05226929-bdde-4a46-af85-01b40827a1f4 -> ../../sda5

lrwxrwxrwx 1 root root 10 2008-10-21 09:32 8e46d1ff-5f34-46b1-a51a-0dac169123b7 -> ../../sdb1

lrwxrwxrwx 1 root root 10 2008-10-21 09:32 c82c1eb4-439c-4982-8764-ac207d4f9622 -> ../../sda1


cat /proc/filesystems

This shows you which file system types are supported under your currently running kernel.
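Tying the UUID lookup above to /etc/fstab, here is a minimal sketch that finds the UUID for a device name and prints a matching fstab line. The function names `uuid_for` and `fstab_line` are hypothetical, and the mount point, file system type and options in the usage example are illustrative assumptions, not values taken from my system.

```shell
# Print the UUID that /dev/disk/by-uuid maps to a device name such as sda1.
# The optional second argument overrides the directory, which is handy for testing.
uuid_for() {
    dev="$1"
    dir="${2:-/dev/disk/by-uuid}"
    for link in "$dir"/*; do
        [ -L "$link" ] || continue
        target=$(readlink "$link")
        # The links look like ../../sda1, so compare only the last path component.
        if [ "${target##*/}" = "$dev" ]; then
            printf '%s\n' "${link##*/}"
            return 0
        fi
    done
    return 1
}

# Print an /etc/fstab line: UUID, mount point, type, options, dump, pass.
# The trailing "0 0" (no dump, no boot-time fsck) is an assumption.
fstab_line() {
    printf 'UUID=%s %s %s %s 0 0\n' "$1" "$2" "$3" "$4"
}
```

A possible use would be `fstab_line "$(uuid_for sda1)" /media/windows ntfs defaults`, reviewing the printed line before pasting it into /etc/fstab by hand.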

So once you've identified the disks you want to back up, and mounted them, you also need to be aware of the parts you DO NOT want to back up. On a Linux system, the list of EXCLUSIONS would include:

- /proc/

- /tmp/

- /sys/

sudo rsync -ravlHz --progress --stats --exclude='/mnt/usbdrive/*' --exclude='/tmp/**' --exclude='/proc/**' --exclude='/sys/**' --cvs-exclude --dry-run / /mnt/usbdrive/backups/liberty

I added the --dry-run option in there because you should always test first, and because I do not want anyone blindly copying and pasting this command without testing and tweaking it.
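The "dry run first" habit can be built into a small helper. The following is a sketch under stated assumptions: `rsync_backup_cmd` is a hypothetical name, the exclusion list simply mirrors the one above, and the function only prints the command (one argument per line) so that it can be reviewed rather than executed blindly.

```shell
# Print an rsync command line, one argument per line, for backing up SRC to DEST.
# It includes --dry-run unless the third argument is exactly "for-real".
rsync_backup_cmd() {
    src="$1"
    dest="$2"
    dry="--dry-run"
    if [ "${3:-}" = "for-real" ]; then
        dry=""
    fi
    # $dry is deliberately unquoted so that an empty value disappears entirely.
    printf '%s\n' rsync -aHz --progress --stats $dry \
        "--exclude=/proc/**" "--exclude=/sys/**" "--exclude=/tmp/**" \
        "--exclude=$dest/**" "$src" "$dest"
}
```

Running `rsync_backup_cmd / /mnt/usbdrive/backups/liberty` prints the dry-run form; adding `for-real` as a third argument drops `--dry-run`. Making the safe behaviour the default means that a forgotten argument costs you a rehearsal instead of an unwanted copy.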

System Image aka Backups for Disaster Recovery

When it came to choosing a backup program, I chose Mondo Rescue because it can back up to CD, DVD, disk (e.g. external USB drive) or other media like tape drives. Plus, it can create a restore disk for you, which is what I want: in the case of catastrophic system failure, I want to be able to recreate my computer on new hardware.

I have an external USB hard drive with a terabyte of storage that I have mounted at /media/disk.

This is the command that I used to create a full system backup of my laptop hard drive to my external USB drive:

# -OV                     do a backup, and verify
# -p greg-laptop          prefix backup files with this
# -i                      use ISO files (CD images) as backup media
# -I /                    include from root (default)
# -N                      exclude all mounted network filesystems
# -d /media/disk/backups  write ISOs to this directory
# -s 4420m                make the ISOs 4,420 MB in size (smaller than a DVD)
# -S /media/disk/tmp      write scratch files to this directory
# -T /media/disk/tmp      write temporary files to this directory
mondoarchive -OV \
    -p greg-laptop \
    -i \
    -I / \
    -N \
    -d /media/disk/backups \
    -s 4420m \
    -S /media/disk/tmp \
    -T /media/disk/tmp

At first, the backup failed with a message that it thought my drive was full. In reality, the problem was that the tmp partition was too small, so I added the -S and -T options and it worked fine.
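A failure like this can be caught before starting a long run by checking the free space in the scratch and temporary directories. This is a minimal sketch: the names `free_kb` and `has_space` are hypothetical, and the threshold in the usage note (room for one 4,420 MB ISO) is my assumption, not a figure documented by Mondo Rescue.

```shell
# Print the free space, in kilobytes, on the filesystem that holds DIR.
free_kb() {
    # -P forces one line per filesystem; field 4 is the "Available" column.
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Succeed only if DIR has at least NEEDED_KB kilobytes free.
has_space() {
    avail=$(free_kb "$1") || return 1
    [ "$avail" -ge "$2" ]
}
```

For example, `has_space /media/disk/tmp $((4420 * 1024)) || echo "scratch directory too small"` would warn before mondoarchive gets the chance to fail halfway through.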

This is what mondoarchive said at the end of the run that used the -T and -S options:

Call to mkisofs to make ISO (ISO #18) ...OK
Please reboot from the 1st ISO in Compare Mode, as a precaution.
Done.
Done.
Writing boot+data floppy images to disk
No Imgs
---promptpopup---1--- No regular Boot+data floppies were created due of space constraints.
However, you can burn /var/cache/mindi/mondorescue.iso to a CD and boot from that.
---promptpopup---Q--- [OK] ---
--> Backup and/or verify ran to completion. However, errors did occur.
/var/cache/mindi/mondorescue.iso, a boot/utility CD, is available if you want it
Data archived OK.
Errors occurred during backup. Please check logfile.
See /var/log/mondoarchive.log for details of backup run.

Basically, I have to "reduce your kernel's size" if I want to create a boot floppy, but it doesn't matter if I want to use a boot ISO instead.

Disk Image for re-deploying a drive/computer

On a more recent "backup" effort, I needed to archive off the contents of a laptop to an external USB drive that was mounted on another system. The source system was a Windows XP machine, while the target machine was running Linux. The tools I used to make the backup were dd[1] and a LiveCD of the Linux Mint distribution. By inserting the Linux Mint Live CD, and rebooting the laptop, I would have access to a bash shell that I could then run the dd tool from. Not only that, but I could use the Secure Shell to pipe the command over the network to the target machine's mounted external 1TB drive. Note that in my case, I used a private key (identity file) to authenticate my ssh session.

sudo dd if=/dev/sda2 | ssh -i /home/mint/id_rsa-greg-notebook greg@192.168.1.11 "dd of=/media/disk-a/backups/sheila-laptop/acer.image.2"

# to "watch" the progress

watch ls -al /media/disk-a/backups/sheila-laptop/

# On Linux, a USR1 signal sent to the process id of a dd process makes it print its status and then continue (BSD systems use SIGINFO for this).

kill -USR1 $(pidof dd)

# a local copy is MUCH faster than a network copy -- getting 17 MB/s instead of ~400 kB/s

sudo dd if=/dev/sda5 bs=4096 conv=noerror of=/media/disk-a/backups/sheila-laptop/acer.image.5
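Since a backup is only worth something once you have checked it, a disk image can be compared against its source right after the copy. The sketch below is an illustration rather than the procedure I actually followed: `image_and_verify` is a hypothetical name, it uses the POSIX `cksum` tool for the comparison, and it assumes the source does not change between the copy and the check. If dd had to skip unreadable blocks (which `conv=noerror` allows), the checksums would not be expected to match.

```shell
# Copy SRC to IMG with dd, then confirm that both have the same checksum.
# Usage: image_and_verify SRC IMG
image_and_verify() {
    src="$1"
    img="$2"
    # Same block size and error handling as the local copy shown above.
    dd if="$src" of="$img" bs=4096 conv=noerror 2>/dev/null || return 1
    # cksum prints "checksum size" when it reads from standard input.
    [ "$(cksum < "$src")" = "$(cksum < "$img")" ]
}
```

On a real partition this would be run with root privileges, for example against /dev/sda5 from a Live CD so that the file system is not mounted and changing underneath the copy.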

Performance

Backing up ~94 GB with MondoRescue to image files with medium compression could still generate around twenty 4 GB DVD images. That is a lot of data and will take a significant amount of time.

Backing up ~60 GB of data across a USB 1 interface using rsync and -z for compression will take over 1 day. In fact, it will take over 10 minutes just to generate the file list. My experience was with 874,490 files.

Similarly, if you are backing up across a network, you can get dramatically slower results than if the source and target are local. For example, in my most recent backup, I was able to copy 18 GB over a wireless network, but it took 43,712 seconds (over 12 hours). Copying 19 GB over a local USB2 interface took 1,037 seconds (about 17 minutes).
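The figures above can be turned into average throughput with a little arithmetic, which also helps when estimating how long a planned backup will take. This is a small sketch with hypothetical function names, assuming 1 GB = 1000 MB and an `awk` on the path.

```shell
# Average throughput in MB/s for GB gigabytes copied in SECONDS seconds.
throughput_mb_s() {
    awk -v gb="$1" -v s="$2" 'BEGIN { printf "%.2f\n", gb * 1000 / s }'
}

# Hours needed to copy GB gigabytes at RATE megabytes per second.
hours_for() {
    awk -v gb="$1" -v rate="$2" 'BEGIN { printf "%.1f\n", gb * 1000 / rate / 3600 }'
}

throughput_mb_s 18 43712   # wireless network: prints 0.41
throughput_mb_s 19 1037    # local USB2:       prints 18.32
```

So the local copy was roughly 44 times faster than the wireless one, which lines up with the ~400 kB/s versus 17 MB/s observed with dd.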

In most backup scenarios, the first backup is the one that takes the most time.